While it’s fundamentally true that the Learning Record Store (LRS) is a tool for storing activity data, that doesn’t mean you should store everything possible in the vague hope that the data will give you the answer you seek.

Having supported many organisations as they get to grips with learning analytics, I often find myself asking “how did you get here?” and “why did you want to do this in the first place?” Many folks find themselves dealing with seemingly endless buckets of data coming from all kinds of sources.

Really think about what data is actually available to you, what is possible to link together, and what hypothesis you could look to prove

What problem are you trying to solve?

I find myself asking “what problem are we trying to solve?” of all sorts of people in L&D time and time again. The answers typically fall into two big problems: “I don’t know what I don’t know” and “I don’t know if my training is working.” Let’s break these down into something we can make useful:

The “I don’t know what I don’t know” problem

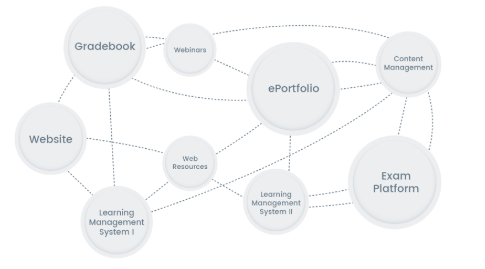

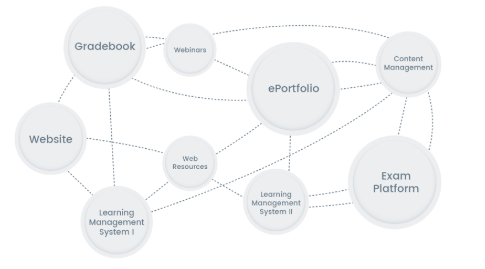

This is super common, and it’s understandable why it’s such a go-to use case for an LRS. Years of solutionising and searching for a platform that gives you what you need have left you with a setup that looks a bit like this:

You’ve got lots of systems made by lots of vendors, and none of them talk to each other. You’ve been tasked with understanding what happens in your learner journey, but doing so feels almost impossible because the record of activity is spread across these platforms, none of which understands the others’ data structures.

Simple solution: point everything at the LRS – job done. But wait, don’t I now just have two copies of the data, and I’m still none the wiser about what any of it means? Yep, plus another platform to maintain.

So what do I store? There are a few ways to answer that, but we have to think about it in the form of a problem.

Problem: I don’t know what’s happening with my content as there is no data for it.

Explanation: Content doesn’t usually store learner interactions in a database, so it’s difficult to see them in any great detail. Without a method to capture that information, we don’t know much about drop-offs or how learners respond to assessment questions.

Solution: xAPI is great for this. It captures detailed interactions with the content and stores them in the LRS. This is a sensible use case because we aren’t duplicating data, and we can start to understand what’s happening.
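To make that concrete, here’s a minimal Python sketch of the kind of statement a piece of content might send when a learner answers a question. It assumes a hypothetical LRS at `https://lrs.example.com/xapi` using HTTP Basic auth, with hypothetical credentials, learner and question IDs, and xAPI version 1.0.3. The `answered` verb IRI comes from the ADL vocabulary. It only builds the request, so nothing is actually sent.

```python
import base64
import json
import uuid
from datetime import datetime, timezone
from urllib.request import Request

# Hypothetical LRS endpoint and credentials: replace with your own LRS's details.
LRS_ENDPOINT = "https://lrs.example.com/xapi"
LRS_KEY, LRS_SECRET = "demo-key", "demo-secret"


def answered_statement(email, name, question_id, question_text, response, correct):
    """Build an xAPI statement recording one learner's answer to one question."""
    return {
        "id": str(uuid.uuid4()),  # statement IDs may be generated by the client
        "actor": {"objectType": "Agent", "name": name, "mbox": f"mailto:{email}"},
        "verb": {
            "id": "http://adlnet.gov/expapi/verbs/answered",
            "display": {"en-US": "answered"},
        },
        "object": {
            "objectType": "Activity",
            "id": question_id,  # hypothetical IRI identifying the question
            "definition": {"name": {"en-US": question_text}},
        },
        "result": {"response": response, "success": correct},
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }


def build_post(statement):
    """Prepare (but do not send) a POST of one statement to the LRS statements resource."""
    token = base64.b64encode(f"{LRS_KEY}:{LRS_SECRET}".encode()).decode()
    return Request(
        f"{LRS_ENDPOINT}/statements",
        data=json.dumps(statement).encode("utf-8"),
        method="POST",
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
            "X-Experience-API-Version": "1.0.3",
        },
    )


statement = answered_statement(
    "sam@example.com", "Sam", "https://courses.example.com/fire-safety/q3",
    "Where is the nearest fire exit?", "B", True,
)
request = build_post(statement)
```

Passing `request` to `urllib.request.urlopen` would send it. In practice, your authoring tool or an xAPI library often handles this step for you.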

So that’s a sensible first step. Next, make sure you have a hypothesis to prove. There is no logic in simply going through each platform one by one and building integrations until you’re lost in a sea of data, weary from months of custom development.
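As an example of a hypothesis-driven question, “where do learners drop off?” can be answered from the statements the content already sends. This sketch makes some hypothetical assumptions: the course sends an ADL `experienced` statement for each page viewed, learners are identified by `mbox`, and `PAGES` lists the course’s page IDs in order. It counts how many learners got no further than each page.

```python
from collections import Counter

EXPERIENCED = "http://adlnet.gov/expapi/verbs/experienced"

# Hypothetical course: page activity IDs listed in the order learners see them.
COURSE = "https://courses.example.com/fire-safety"
PAGES = [f"{COURSE}/{p}" for p in ("intro", "module-1", "module-2", "module-3", "summary")]


def drop_off_points(statements, pages=PAGES):
    """Count how many learners got no further than each page."""
    furthest = {}  # learner mbox -> index of the furthest page they experienced
    for s in statements:
        if s["verb"]["id"] != EXPERIENCED or s["object"]["id"] not in pages:
            continue  # ignore other verbs and activities outside this course
        learner = s["actor"]["mbox"]
        index = pages.index(s["object"]["id"])
        furthest[learner] = max(furthest.get(learner, -1), index)
    counts = Counter(pages[i] for i in furthest.values())
    return {page: counts[page] for page in pages}
```

The count for the last page is the number of learners who reached the end. A spike on any earlier page is the sort of pattern worth investigating.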

The “I don’t know if my training is working” problem

Another common problem is demonstrating return on investment from a financial perspective. What you often find is that many of the courses and workshops being delivered aren’t well suited to measuring the impact of training against a monetary value.

Also, as many people have pointed out, ROI doesn’t have to mean making more money; it could mean reducing churn or increasing employee confidence.

The key here is to really think about what data is actually available to you, what is possible to link together, and what hypothesis you could look to prove before you start chucking every bit of data into the LRS. There is an argument for data duplication here, but only if it can be justified by a test that you want to carry out.

Problem: I need to prove the impact of my training on other areas of the business.

Explanation: The task is to demonstrate that the training delivered has a positive impact on a particular area of the business. The data is unlikely to come from a single source, so it is important to link it together.

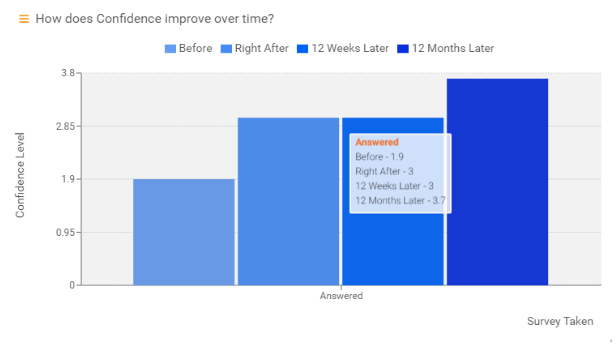
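Linking usually comes down to agreeing on a shared learner identifier. Here’s a minimal sketch. It assumes learners are identified by email on both sides: the xAPI `mbox` in the LRS, and a hypothetical `email` column in a survey export from another system. The course activity ID is also hypothetical.

```python
COMPLETED = "http://adlnet.gov/expapi/verbs/completed"


def learner_key(identifier):
    """Normalise an email or an xAPI mbox ("mailto:...") into one shared join key."""
    identifier = identifier.strip()
    if identifier.lower().startswith("mailto:"):
        identifier = identifier[len("mailto:"):]
    return identifier.lower()


def link_records(statements, survey_rows, course_id):
    """Join LRS completions for one course with survey rows exported from another system.

    Assumes every actor has an mbox and every survey row has an "email" field.
    Returns {learner_key: {**survey_row, "completed": bool}} for each surveyed learner.
    """
    completed = {
        learner_key(s["actor"]["mbox"])
        for s in statements
        if s["verb"]["id"] == COMPLETED and s["object"]["id"] == course_id
    }
    return {
        learner_key(row["email"]): {**row, "completed": learner_key(row["email"]) in completed}
        for row in survey_rows
    }
```

If your systems don’t share an identifier like this, it’s worth finding that out before building anything else.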

Solution: Decide on something you want to test, such as “we want to see if employee confidence in a subject improves in the period after the course.” You’ll need a baseline dataset from before you implemented the training, which might be pre-course surveys or existing result data that the business already uses to measure success. Ensure that the course is designed to have an impact on the area you’re testing, then decide how you will measure completion and which time periods you want to test.
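Once the records are linked, a first pass at the confidence hypothesis can be as simple as comparing average before-and-after scores. This sketch assumes each linked record has hypothetical `pre`, `post` and `completed` fields. A difference in averages is a starting point for the conversation, not proof that the course caused the change.

```python
from statistics import mean


def confidence_change(linked):
    """Average post-minus-pre confidence change, split by course completion.

    Expects {learner: {"pre": number, "post": number or None, "completed": bool}}.
    """
    changes = {True: [], False: []}
    for record in linked.values():
        if record.get("pre") is None or record.get("post") is None:
            continue  # a learner needs both a baseline and a follow-up score
        changes[record["completed"]].append(record["post"] - record["pre"])
    return {
        "completed_mean": mean(changes[True]) if changes[True] else None,
        "not_completed_mean": mean(changes[False]) if changes[False] else None,
        "n_completed": len(changes[True]),
        "n_not_completed": len(changes[False]),
    }
```

Keeping the group sizes alongside the averages makes it obvious when a result rests on only a handful of learners.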

At this point, you can decide on the storage method. xAPI might be suitable as a way to standardise the shape of the data for easier analysis, or you might use the LRS simply as a validation tool for your content before handing the data off to your existing reporting tools, which should be possible with any modern LRS. My message here is to use each tool where it fits best, not just because you can.
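If you use the LRS as a validation step before handing data to existing reporting tools, the handoff might look something like this sketch. The checks below cover only a tiny subset of the xAPI specification’s rules (a real LRS validates far more), and the CSV column layout is a hypothetical example for a generic reporting tool.

```python
import csv
import io

REQUIRED = ("actor", "verb", "object")


def problems(statement):
    """Return basic structural problems (a small subset of the xAPI spec's rules)."""
    issues = [f"missing {key}" for key in REQUIRED if key not in statement]
    if "verb" in statement and "id" not in statement["verb"]:
        issues.append("verb has no id")
    if "object" in statement and "id" not in statement["object"]:
        issues.append("object has no id")
    return issues


def to_csv(statements):
    """Flatten valid statements into CSV text for a reporting tool, skipping invalid ones."""
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["actor", "verb", "object", "success", "timestamp"])
    for s in statements:
        if problems(s):
            continue  # leave invalid statements out of the handoff
        actor = s["actor"].get("mbox", s["actor"].get("name", ""))
        success = s.get("result", {}).get("success", "")
        writer.writerow([actor, s["verb"]["id"], s["object"]["id"], success, s.get("timestamp", "")])
    return out.getvalue()
```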

What’s next?

Now, I’ve only covered a couple of the problems I’ve experienced in L&D, and I know there are many more. I’m also certain there are plenty I’ve yet to come across.

One thing I’m sure about, though, is that we have to think about learning analytics as a series of problems to solve, not just as a place to regurgitate the same old boring reports we always have.

What’s the point in measuring completions if there is no measurable impact? We might as well not bother. Get creative, experiment and find answers to important questions. Trust me, it’s much more fun that way.

So the next time you’re thinking about learning analytics, maybe put together a little checklist like the one below before you commit lots of time and energy to the journey.

Checklist

- What is the problem I’m trying to solve?

- Would solving it have a positive impact?

- Is the training I’m delivering designed around solving this specific issue?

- Do I have the data to demonstrate the existing state before the training takes place?

- Can I get the data to test whether the training makes a difference?

- Where is the best place to store my data to carry out my analysis?

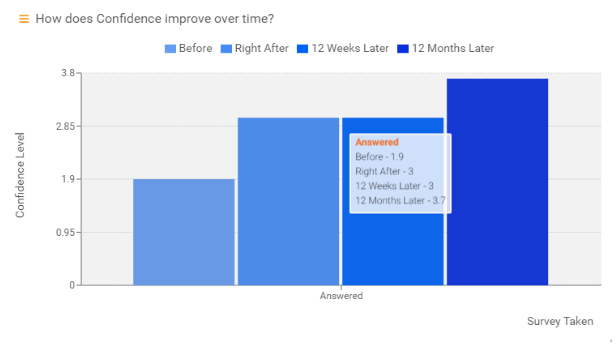
